Overview

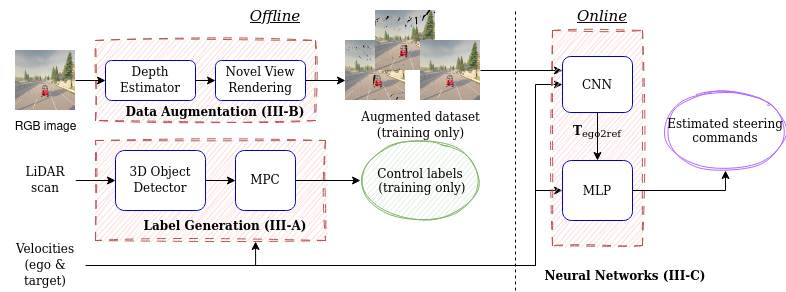

Our proposed framework consists of preparatory offline steps (left) and an online pipeline (right), which are conducted during training and testing of our system, respectively.

Left: First, we generate control labels using a Model Predictive Controller (MPC). The MPC takes as input the location and orientation of the target vehicle relative to the ego-vehicle, as estimated by a LiDAR-based 3D detector. Second, we augment the data by using estimated dense depth maps to synthesize novel views.
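The depth-based view synthesis step can be illustrated with standard depth-image-based forward warping. This is a minimal sketch under stated assumptions, not the authors' implementation. It assumes a pinhole camera whose intrinsics `K` are shared by the original and virtual views, a dense depth map giving per-pixel depth along the optical axis, and a rigid transform `(R, t)` from the original to the virtual camera frame. The function name `synthesize_view` is hypothetical, and disocclusion holes are simply left empty.

```python
import numpy as np


def synthesize_view(image, depth, K, R, t):
    """Forward-warp `image` into a virtual camera using a dense depth map.

    image: (H, W, C) array; depth: (H, W) depth along the optical axis;
    K: (3, 3) pinhole intrinsics, assumed shared by both cameras;
    R, t: rigid transform with p_virtual = R @ p_source + t (an assumption).
    Returns the warped image and a boolean mask of pixels that received a value.
    Unfilled pixels (disocclusion holes) are left at zero.
    """
    H, W, C = image.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u.ravel(), v.ravel(), np.ones(H * W)])  # (3, H*W)

    # Back-project every pixel to a 3D point in the source camera frame.
    pts = (np.linalg.inv(K) @ pix) * depth.ravel()
    # Express the points in the virtual camera frame.
    pts_v = R @ pts + np.asarray(t, dtype=float).reshape(3, 1)

    # Keep points in front of the virtual camera and project them.
    src = np.flatnonzero(pts_v[2] > 1e-6)
    proj = K @ pts_v[:, src]
    u_v = np.round(proj[0] / proj[2]).astype(int)
    v_v = np.round(proj[1] / proj[2]).astype(int)
    z_v = pts_v[2, src]

    # Discard points that land outside the virtual image.
    inside = (u_v >= 0) & (u_v < W) & (v_v >= 0) & (v_v < H)
    src, u_v, v_v, z_v = src[inside], u_v[inside], v_v[inside], z_v[inside]

    # Z-buffer: when several points hit the same target pixel, keep the nearest.
    tgt = v_v * W + u_v
    order = np.lexsort((z_v, tgt))  # sort by target pixel, then by depth
    _, first = np.unique(tgt[order], return_index=True)
    keep = order[first]

    out = np.zeros((H * W, C), dtype=image.dtype)
    mask = np.zeros(H * W, dtype=bool)
    out[tgt[keep]] = image.reshape(-1, C)[src[keep]]
    mask[tgt[keep]] = True
    return out.reshape(H, W, C), mask.reshape(H, W)


# Usage: shift the virtual camera 0.2 m to the right of the original one.
H, W = 4, 6
image = np.arange(H * W * 3, dtype=float).reshape(H, W, 3)
depth = np.full((H, W), 10.0)
K = np.array([[50.0, 0.0, W / 2], [0.0, 50.0, H / 2], [0.0, 0.0, 1.0]])
warped, mask = synthesize_view(image, depth, K, np.eye(3), [-0.2, 0.0, 0.0])
```

With focal length 50 px, a 0.2 m baseline and 10 m depth give a disparity of 50 × 0.2 / 10 = 1 pixel. Every pixel therefore moves one column to the left, and the rightmost column has no source pixel and is marked as a hole in `mask`. The original pipeline does not state how such holes are handled.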

Right: Our learning method is trained to predict accurate lateral and longitudinal control values, leveraging the generated labels, the augmented image dataset, and the velocities of both the ego and target vehicles. At test time, our approach requires only an RGB image sequence and these velocities as input to control the ego-vehicle.
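The following deliberately simplified sketch makes the training and test-time input/output contract concrete. Everything in it is hypothetical and stands in for the real network. Per-frame channel means replace a learned image encoder, a least-squares linear head replaces the trained model, and the names `features`, `fit_controller`, and `predict` are illustrative only. The sketch shows that training consumes image sequences, velocities, and MPC labels, while inference needs only an image sequence and the two velocities.

```python
import numpy as np


def features(frames, v_ego, v_target):
    """Hypothetical feature vector for one sample.

    frames: (T, H, W, 3) RGB sequence; v_ego, v_target: scalar speeds.
    Per-frame channel means are a placeholder for a learned image encoder.
    """
    img_feat = frames.mean(axis=(1, 2)).ravel()  # (T * 3,)
    return np.concatenate([img_feat, [v_ego, v_target, 1.0]])  # 1.0 = bias


def fit_controller(samples, labels):
    """Fit a linear head mapping features to [lateral, longitudinal] labels.

    samples: list of (frames, v_ego, v_target); labels: (N, 2) control values,
    e.g. produced offline by the MPC (placeholder for real training).
    """
    X = np.stack([features(*s) for s in samples])
    weights, *_ = np.linalg.lstsq(X, np.asarray(labels, dtype=float), rcond=None)
    return weights


def predict(weights, frames, v_ego, v_target):
    """Test time: only the RGB sequence and the two velocities are needed."""
    lateral, longitudinal = features(frames, v_ego, v_target) @ weights
    return lateral, longitudinal
```

A linear head is used here only because it fits in a few lines and can be checked exactly. It says nothing about the architecture actually used by the framework.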